Related content in this Stream

Embark on an electrifying journey with VMware Greenplum's Minor Release 6.27.0! The TMC March update ignites support for Kubernetes 1.29 and unleashes Spring Cloud Gateway Releases for an...

Revolutionize Your Data Science Experience: Elevating Data Exploration with GreenplumPython 1.1.0's Advanced Embeddings Search in PostgreSQL and Greenplum. Unlocking New Dimensions in Data Analysis...

As we stand at the threshold of a new era in data management, Greenplum continues to lead the industry with its commitment to innovation.

Author | Nihal Jain. Troubleshooting and identifying supportability issues in a complex database system can be a daunting task. However, with the advent of tools like gpsupport, the process has...

This week we bring you new TAS releases, Tanzu Data solutions (GemFire and Greenplum GA releases), and Spring product updates, along with release notes and KB articles with guidance and troubleshooting...

6:37

Hear about Greenplum's recent advancements, including AI capabilities with the pgvector extension and PostgresML, and discuss handling billions of vectors and real-time data analytics, highlighting Gree

8:03

It is now official! VMware Tanzu Greenplum 7 was released on October 28 with a load of new and enhanced features. Check out this video to see how VMware Tanzu Greenplum 7 is the unified platform for

2:39

VMware Tanzu Greenplum already had traditional database security in roles, permissions, and role-based access control (RBAC). Now, we introduce new features like row-level security and improved featur

5:38

Check out this video to find out how VMware Tanzu Greenplum 7 makes the life of a developer much better with things like automated migration from Oracle-like databases, easier merge of data sets with

4:12

How can you make a super-efficient system that can support thousands of users running millions of queries in one system? Watch this video to find out how! Learn more: https://www.vmware.com/products/g

6:00

With the inclusion of pgvector and other new and improved capabilities, VMware Tanzu Greenplum 7 can support many AI use case requirements. Learn more: https://www.vmware.com/products/greenplum.html

9:00

VMware Tanzu Greenplum 7 provides a lot of features and enhancements aimed at improving the user experience, everything from the improved management and handling of statistics to the on-the-fly, non-di

5:54

Think about this... A massively parallel processing (MPP) analytics platform with blazing performance just got faster! Watch the video to find out how! Learn more about Tanzu Greenplum: https://www.vm

3:23

The enterprise analytics and data warehouse platform based on open source Postgres just got an upgrade. VMware Tanzu Greenplum 7 is now based on the more modern Postgres 12. Learn more: h

2:44

VMware Tanzu Greenplum is not just a data silo; instead, it integrates with a large ecosystem of data products, making it a unified data and analytics platform. Learn more: https://www.vmware.com/produ

13:12

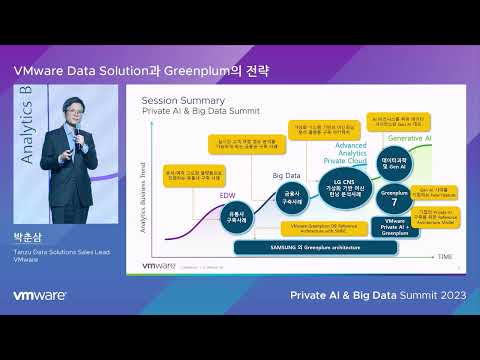

VMware Private AI & Big Data Summit 2023. Speaker: 박춘삼, Head of Data Solutions Sales, VMware APJ

19:52

VMware Private AI & Big Data Summit 2023. Speaker: 김병수, Part Leader; Sr. Director, Head of Samsung Memory Research Center & Head of OCP (Open Compute Project) Experience Center Korea, Samsung

28:32

VMware Private AI & Big Data Summit 2023. Speaker: Ivan Novick, Director of Product Management, VMware Greenplum, VMware

Authors | Kevin Yeap & Brent Doil. Introduction: Greenplum Upgrade (gpupgrade) is a utility that enables in-place upgrades from Greenplum Database (GPDB) 5.x to 6.x. Version 1.7.0...