When humans struggle to identify fake news, how can computers and technical solutions be used to help?

Computer researchers at the University of Washington recently published a video of President Barack Obama speaking. Except he’s not. The researchers built algorithms, to be published in August, that slice up existing audio and high-definition video and reassemble the pieces into fake videos that look completely real. The former president appears to say things he never did.

“The idea is to use the technology for better communication between people,” co-author and professor Ira Kemelmacher-Shlizerman told The Atlantic. Others on the internet, however, may put it to very different uses. Such software could add to the fast-rising tide of fake news. In March, World Wide Web creator Tim Berners-Lee said that “fake news” had become one of the greatest threats to the web’s ability to serve humanity.

The internet has long been a place for misinformation and trickery. Every new platform for better communication has a shadow side with malicious intentions. Every website, whether it sells news, trades services for fees, or provides information, requires verification and reassurance in some form. Most of these services have lost and regained consumers’ confidence at some point. Each time issues arise, designers, engineers, and product managers work together to re-establish their customers’ trust in the product.

We’ve seen this before. When smartphones took off, Apple took measures both in its App Store and in the press to assure the public that applications were secure and trustworthy. Android celebrated its “open” development platform, yet suffered early on because its applications were more often fraught with malware and scams. In 2014 a developer sold over 30,000 copies of an antivirus application that actually did nothing. Since then the “Android police” have worked to crack down on bad apps through better code scanning, permission certificates, and human intervention, and while scams still happen, some advocates now argue that Android’s apps are safer than those on iOS.

When companies like Airbnb, VRBO, Uber, and Lyft first started having people rent out their homes and cars, hosts, guests, drivers, and passengers were wary of both the transactions and their own safety. But with feedback loops that let everyone rate their experience, background checks, insurance policies, and better user experiences overall, ride- and home-renting firms have grown into bedrock applications for people’s livelihoods. Concerns remain, but every year more Americans transact with these services.

Regaining Control of the “News” Frontier

Today, “weaponized misinformation” spread via major social networks is the latest chapter in the continually evolving story of people’s faith in software. It’s now the turn of social platforms and news organizations to rethink their design and recapture their readers’ trust. But how? The solutions are still taking shape.

There are (at least) three basic definitions of fake news. The first applies when established media companies, often known for accurate reporting, portray stories in ways their detractors dismiss as simply “fake.” The second covers media organizations reporting what many would call selective facts to support a narrative. Some call this partisan news; others call it fake news. Regardless, these first two have fed the chorus of distrust in large media organizations. The third is straight lies. Hoaxes. Baseless propaganda.

But defining fake news is easier than identifying it. How are emerging technical solutions, like recognition algorithms that analyze sourcing, headline syntax, and article text, supposed to identify fake stories when humans struggle?

Sixty-two percent of adult Americans get news from social media, which is by far the biggest vehicle for spreading false stories. Fake news usually spreads through bots that flood social channels so a story appears to be trending (to the social media algorithms) when it’s not. And while Facebook is taking public steps to regain trust, such as restricting users’ ability to alter posts’ titles and descriptions, it remains a closed ecosystem. No one except its own engineers controls what its algorithms deem “important.” That has prompted Facebook to hire thousands of people and to work with journalist collectives like Correctiv, all of whom manually check stories for veracity. But the problem is far from solved.

As with any societal woe, start-ups and academics are emerging to take on fake news with a variety of algorithmic approaches. Grapple, which was recently accepted into IBM’s Global Entrepreneur Program, uses a bot to trace keywords, figures, and sources back to their points of origin. The “Fake News Challenge” has released initial results from three winning teams: Cisco’s cybersecurity division Talos Intelligence, TU Darmstadt in Germany, and University College London. All three built software that can identify whether two or more articles are on the same topic and, if they are, whether they agree, disagree, or merely discuss it. But these tools are still in their infancy.
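The teams’ actual systems aren’t described here, so what follows is only a rough, hypothetical sketch of the first half of the task: deciding whether two articles are on the same topic. It represents each article as a TF-IDF vector and compares the vectors with cosine similarity, a common baseline for text relatedness. The function names and the 0.2 threshold are illustrative assumptions, and telling agreement from disagreement would require a trained classifier that this sketch does not attempt.

```python
import math
import re
from collections import Counter
from typing import Dict, List


def tokenize(text: str) -> List[str]:
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def tfidf_vectors(docs: List[str]) -> List[Dict[str, float]]:
    """Return one sparse TF-IDF vector (term -> weight) per document."""
    tokenized = [tokenize(doc) for doc in docs]
    n_docs = len(docs)
    # Document frequency: how many documents contain each term.
    doc_freq = Counter()
    for tokens in tokenized:
        doc_freq.update(set(tokens))
    vectors = []
    for tokens in tokenized:
        counts = Counter(tokens)
        total = len(tokens) or 1
        vectors.append({
            # Term frequency times smoothed inverse document frequency.
            term: (count / total) * (math.log((1 + n_docs) / (1 + doc_freq[term])) + 1)
            for term, count in counts.items()
        })
    return vectors


def cosine(a: Dict[str, float], b: Dict[str, float]) -> float:
    """Cosine similarity between two sparse vectors; 0.0 if either is empty."""
    dot = sum(weight * b.get(term, 0.0) for term, weight in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# Hypothetical cut-off; a real system would tune this on labeled data.
SAME_TOPIC_THRESHOLD = 0.2


def same_topic(article_a: str, article_b: str,
               threshold: float = SAME_TOPIC_THRESHOLD) -> bool:
    """Guess whether two articles cover the same topic."""
    vec_a, vec_b = tfidf_vectors([article_a, article_b])
    return cosine(vec_a, vec_b) >= threshold
```

For example, `same_topic("Researchers release fake video of Obama speaking", "Fake Obama video created by researchers using audio")` returns `True`, while comparing the first headline with one about a bakery award returns `False`. Word overlap is easy to game, which is one reason the stance step matters.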

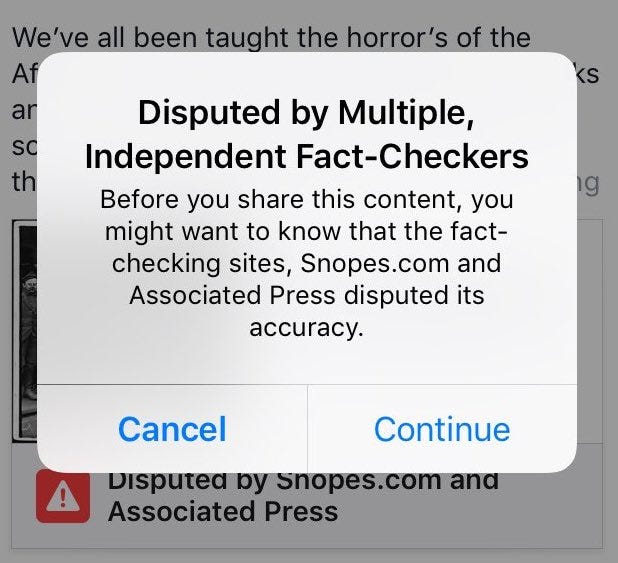

Then there are people like New York Magazine journalist Brian Feldman who are taking the matter into their own hands. Feldman built a browser extension that warned readers when they loaded a news site on a list of suspicious sources compiled by media professor Melissa Zimdars. Users would receive a pop-up that said “Warning! The articles on this website are likely to be fake, false or misleading!” But relatively few people installed the extension. He has open-sourced the code so anyone can add to it. “It was mostly to prove a point,” he says.
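The article doesn’t say how the extension was built. The core logic it describes, checking the current page’s domain against a curated list and showing a warning, can be sketched in a few lines of Python. The domains below are hypothetical placeholders rather than entries from Zimdars’s list, and an actual browser extension would run equivalent logic in JavaScript against the open tab’s URL.

```python
from typing import Optional, Set
from urllib.parse import urlparse

# Hypothetical placeholder entries, not taken from Melissa Zimdars's list.
SUSPICIOUS_DOMAINS = {"example-hoax.com", "totally-real-news.net"}

WARNING = ("Warning! The articles on this website are likely to be "
           "fake, false or misleading!")


def is_suspicious(url: str, blocklist: Set[str] = SUSPICIOUS_DOMAINS) -> bool:
    """True if the URL's host, or any parent domain of it, is on the list."""
    host = (urlparse(url).hostname or "").lower()
    labels = host.split(".")
    # Check "news.example-hoax.com", then "example-hoax.com", but never a bare TLD.
    for i in range(len(labels) - 1):
        if ".".join(labels[i:]) in blocklist:
            return True
    return False


def warning_for(url: str, blocklist: Set[str] = SUSPICIOUS_DOMAINS) -> Optional[str]:
    """Return the pop-up text for a suspicious URL, or None for other URLs."""
    return WARNING if is_suspicious(url, blocklist) else None
```

Matching parent domains means a subdomain of a listed site (such as `www.` or `news.`) also triggers the warning, while a lookalike domain that merely contains a listed name does not. The obvious weakness, and part of Feldman’s point, is that the list only protects readers if someone keeps it current.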

The strongest measure today remains outside the realm of software: publishers and social networks are asking readers to decide what is fake. In April, Facebook issued guidelines on spotting warning signs, such as overly catchy headlines, and on habits like examining the URL closely; this month, it launched a related-articles feature that offers readers alternative perspectives on the same news. Meanwhile, media organizations accused of a partisan slant, like the New York Times, are letting readers decide for themselves by offering contrasting positions from across the web. Sites like PolitiFact can issue “Pants on Fire” ratings for bogus stories they expose, but readers still have to do the checking themselves.

“I think there’s a chance to algorithmically identify things that are more likely than not to be ‘fake news,’ but they will always work best in combination with a person with a sharp eye,” Jay Rosen, a professor of journalism at New York University, told Wired.

As the internet widens the information fire hose, from apps to niche media, helpful and nefarious alike, people’s own awareness seems, for now, to be the best safeguard for the truth. Just as many internet users won’t upload personal information to sites without HTTPS, the hope is that users won’t download news (if only mentally) from sites that haven’t proven trustworthy. But security can’t happen in a vacuum. Software makers once stepped in to keep people’s personal data secure. Now it seems time for them to step in and help keep the truth secure.

Change is the only constant, so individuals, institutions, and businesses must be Built to Adapt. At Pivotal, we believe change should be expected, embraced, and incorporated continuously through development and innovation, because good software is never finished.

The Design of Trust: Combating Fake News was originally published in Built to Adapt on Medium.